Red Drifting & the Future of AI Red Teaming

Discover how Red Drifting erodes human judgment in AI workflows and why the future of AI red teaming must test humans, not just models.


Why AI Red Teaming Must Change

Traditional AI red teaming tests models. Modern failures start with humans. In real-world workflows, operators verify less and trust confident AI outputs more. This slow erosion of judgment, called Red Drifting, creates invisible risk long before models fail. Most AI governance measures outputs, not how human behavior changes over time. Drift surfaces only after audits fail, policies are violated, or unsafe decisions slip through. This whitepaper explains why human judgment is now the weakest layer in AI systems and how red teaming must evolve to protect it.

Key Insights Included in the Whitepaper:

- What Red Drifting Is: How prolonged AI use degrades human verification and policy alignment.

- Why Traditional Red Teaming Falls Short: Legacy red-teaming models ignore the human as a variable that attackers exploit.

- The Human Attack Surface: Cognitive, behavioral, emotional, and contextual drift in daily workflows.

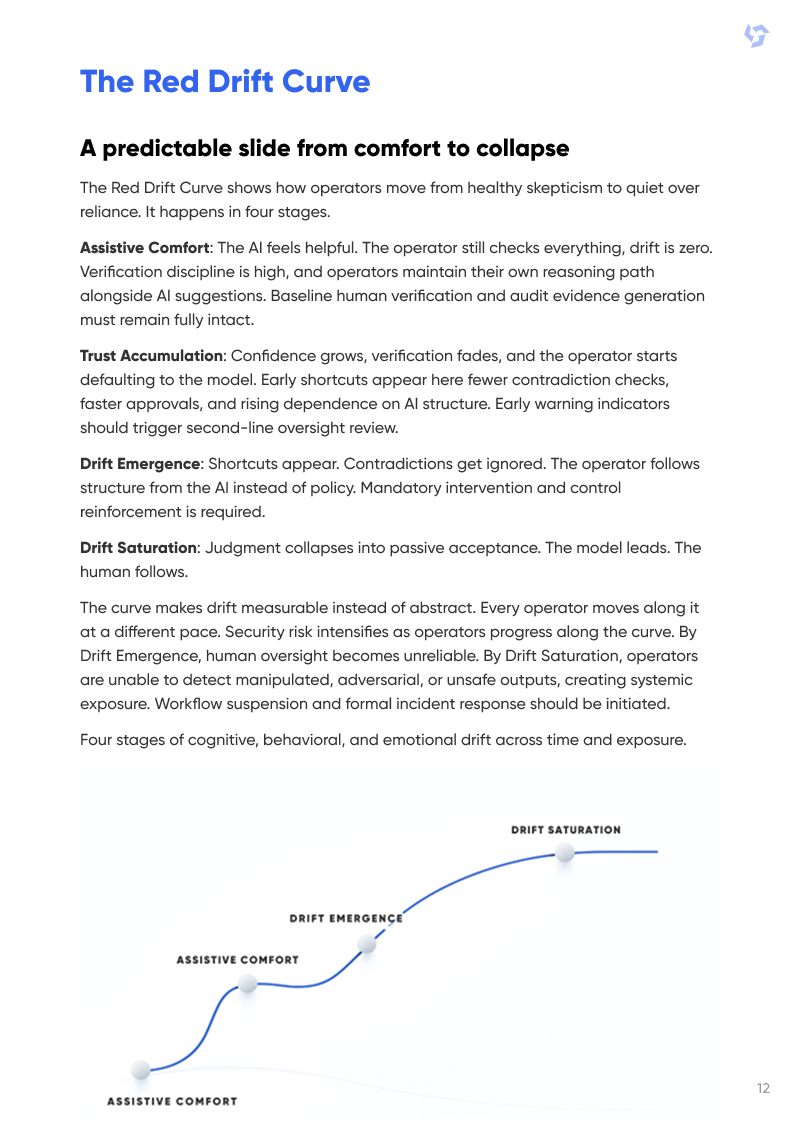

- The Red Drift Curve: How operators move from skepticism to passive acceptance.

- A New Red Drift Framework: How to measure, induce, detect, and recover from drift.
